Return of the Clustering

Kubernetes for Drupal


"Where are the links?"

We create and care for Drupal-powered websites.

Minneapolis, MN (Mostly!)

Services

Site and hosting migration

Drupal 9 upgrades

Site audits

Process consultancy

Ride the Whale

Docker for Drupalists

Avoid Deep Hurting!

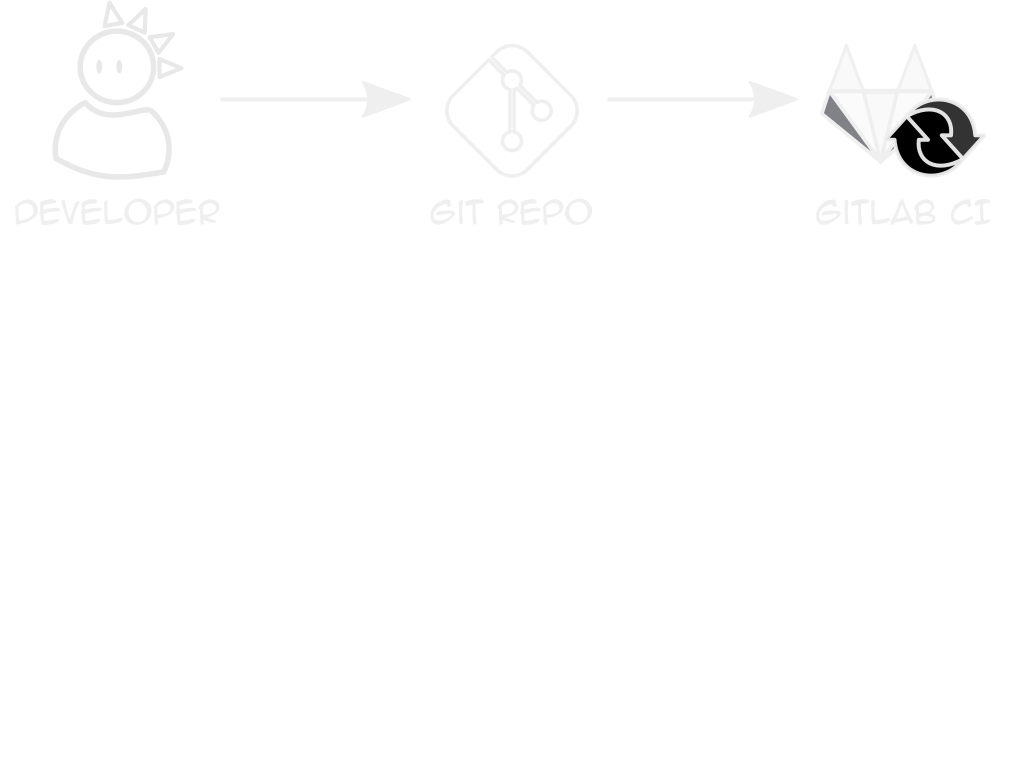

Deployments beyond git

Docker in Production?

Docker Security

Docker maps users between host and container

Most containers run...as root
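Kubernetes lets a pod refuse to run containers as root. A minimal sketch, assuming a hypothetical image (`example/web-image:1.0`) that is built to work as UID 1000; the pod name is also illustrative:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: non-root-example              # Hypothetical name.
spec:
  securityContext:
    runAsNonRoot: true                # Refuse to start any container that would run as UID 0.
    runAsUser: 1000                   # Assumed UID; the image must work as this user.
    runAsGroup: 1000
  containers:
    - name: web
      image: example/web-image:1.0    # Hypothetical image built to run as UID 1000.
      securityContext:
        allowPrivilegeEscalation: false   # Block setuid-style privilege gains.
```

If the image expects root, the kubelet will refuse to start the container rather than silently running it as root.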

Passing config

Avoid Compose-style environment variables

Use Secrets and ConfigMaps instead
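A ConfigMap holds non-secret settings and, like a Secret, can be mounted so that each key becomes a file. A minimal sketch; the ConfigMap name, keys, pod name, and mount path are all hypothetical, and the app must be written to read its settings from those files:

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: drupal-settings                 # Hypothetical name.
data:
  site-name.txt: "Example Site"         # Plain text; unlike Secret data, no base64 needed.
  trusted-host.txt: "^example\\.com$"
---
apiVersion: v1
kind: Pod
metadata:
  name: config-example                  # Hypothetical name.
spec:
  volumes:
    - name: vol-settings
      configMap:
        name: drupal-settings           # Each key becomes a file in the mount.
  containers:
    - name: web
      image: ten7/flight-deck-web
      volumeMounts:
        - name: vol-settings
          mountPath: /config/drupal     # Assumed path.
          readOnly: true
```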

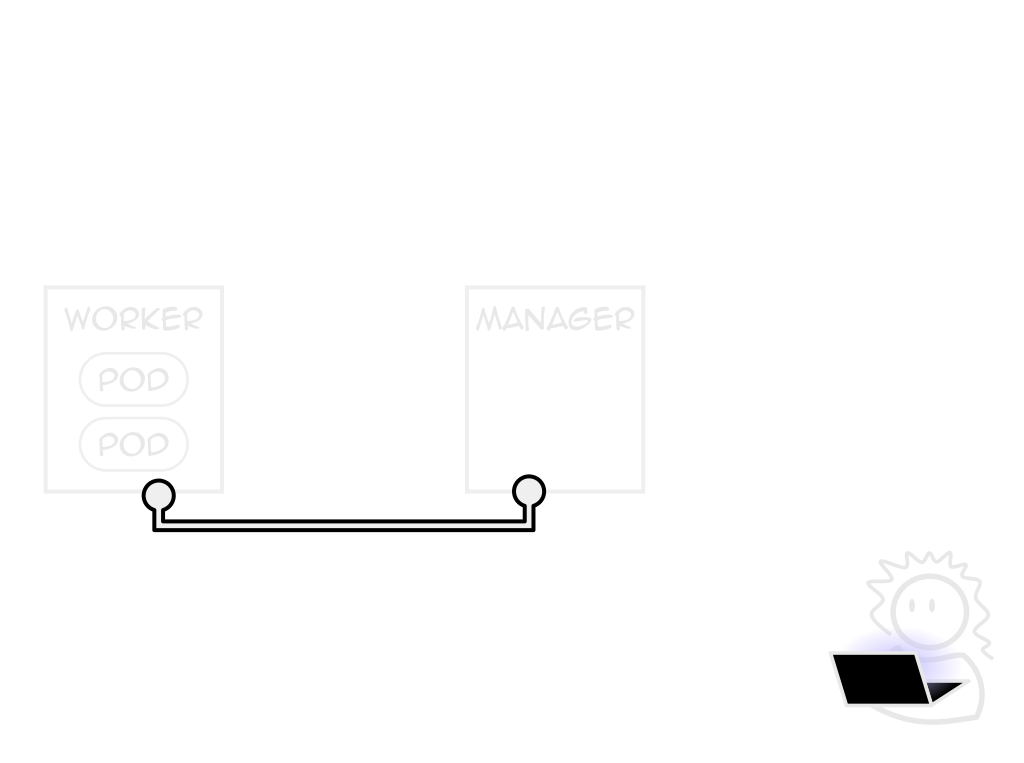

Single-node Docker

Runs all containers on the same system

Easy, but scaling is vertical only

orchestration

Coordinates hosts to act as one

Deploy instances, distribute load, deliver resources

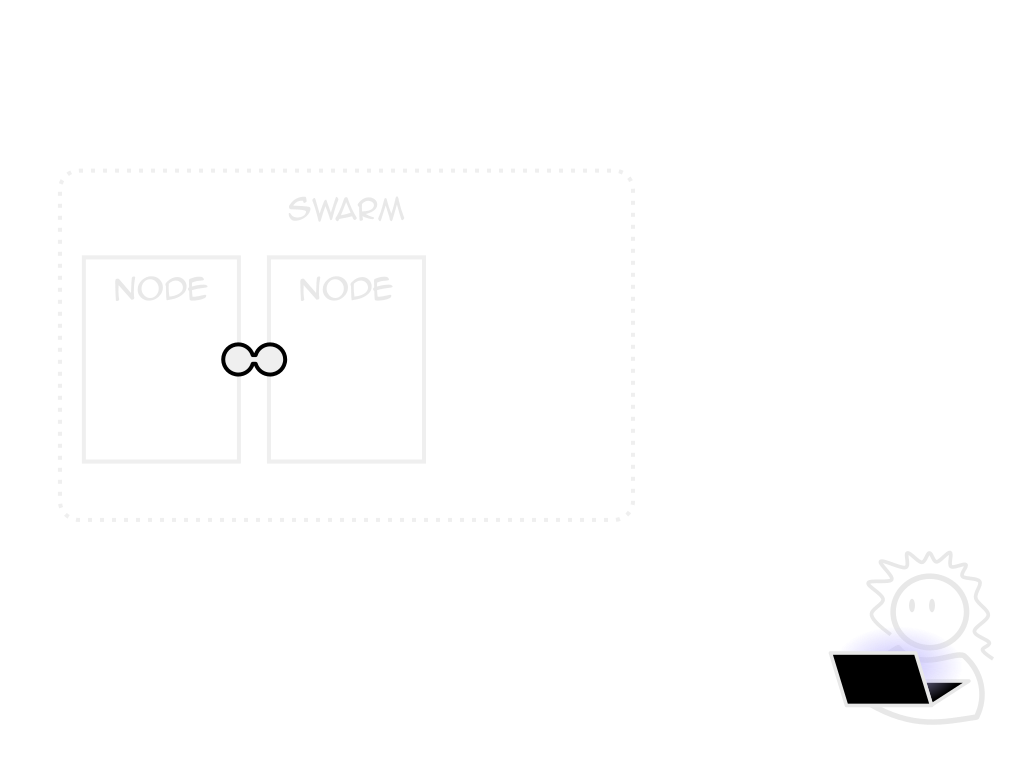

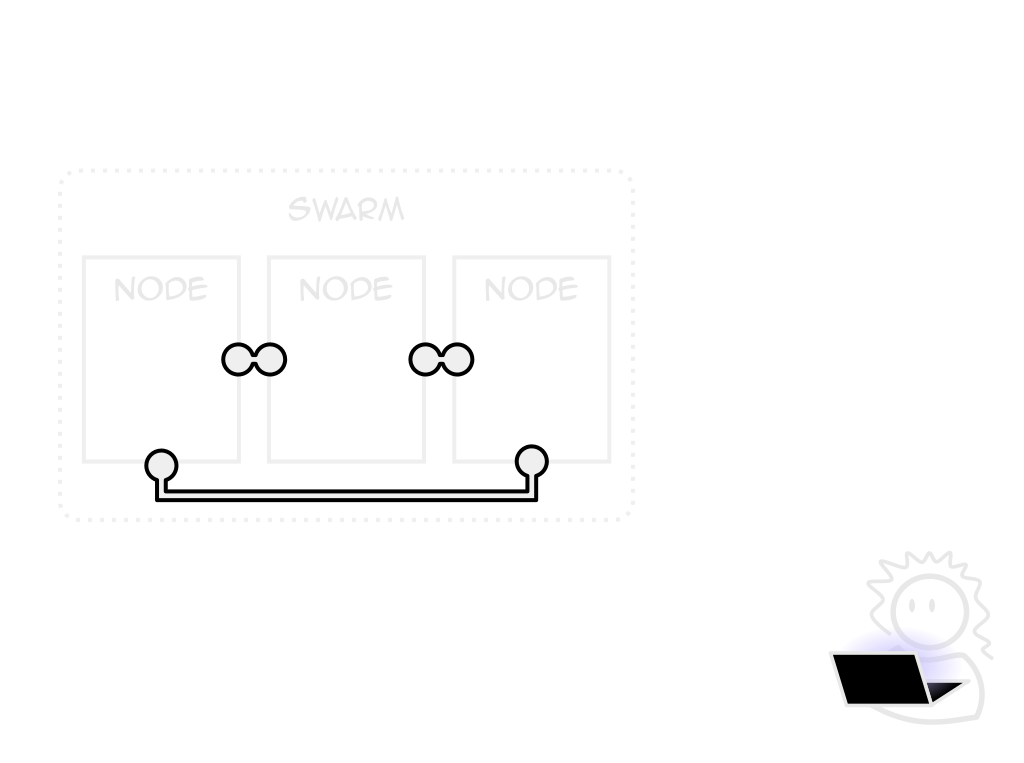

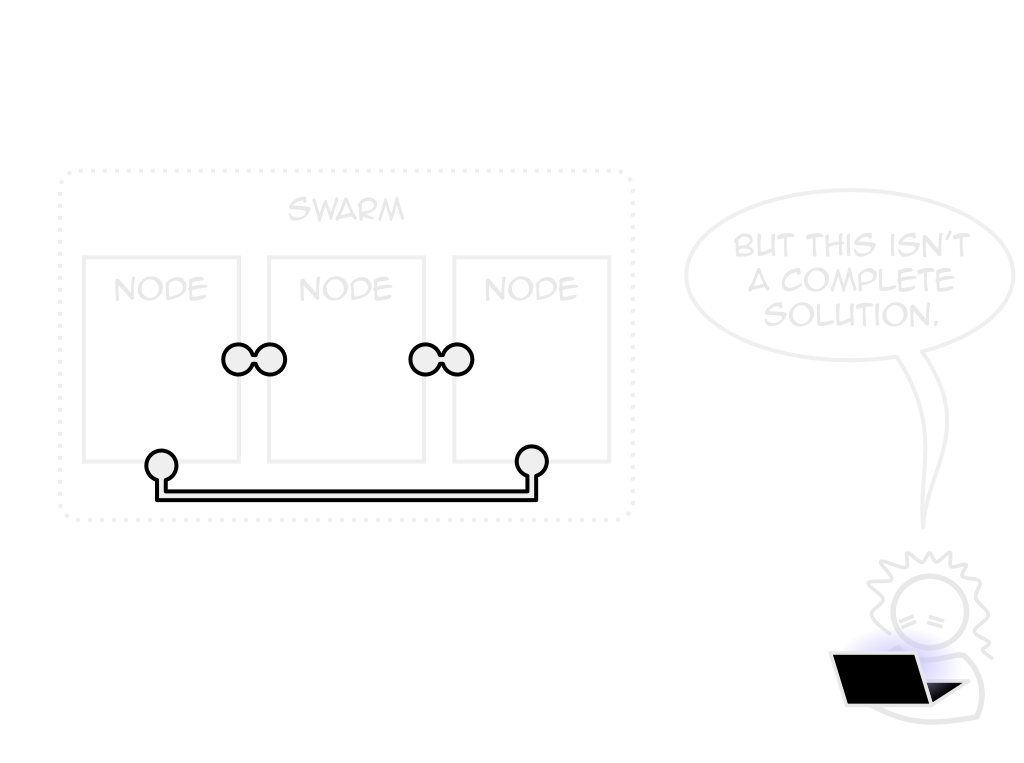

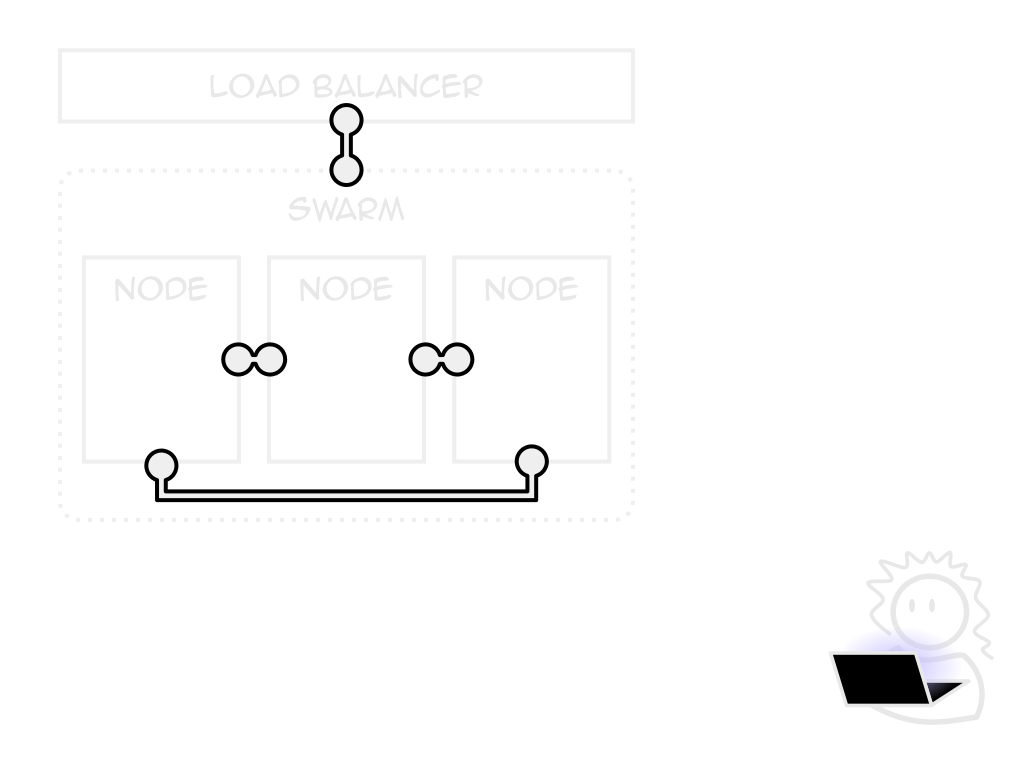

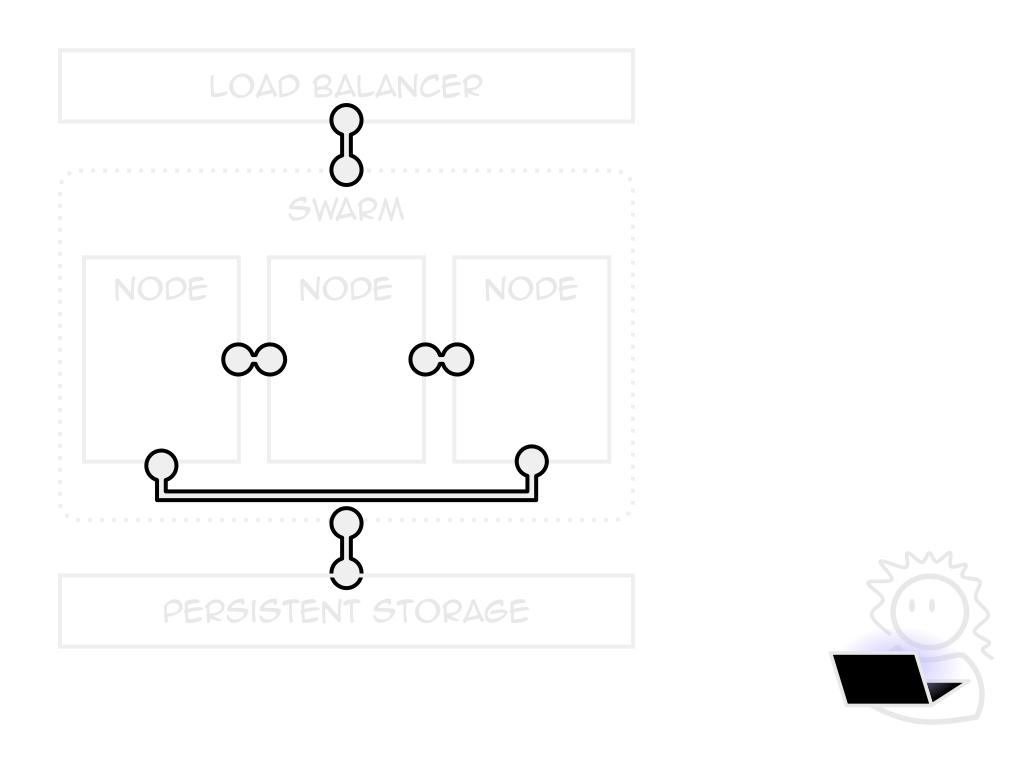

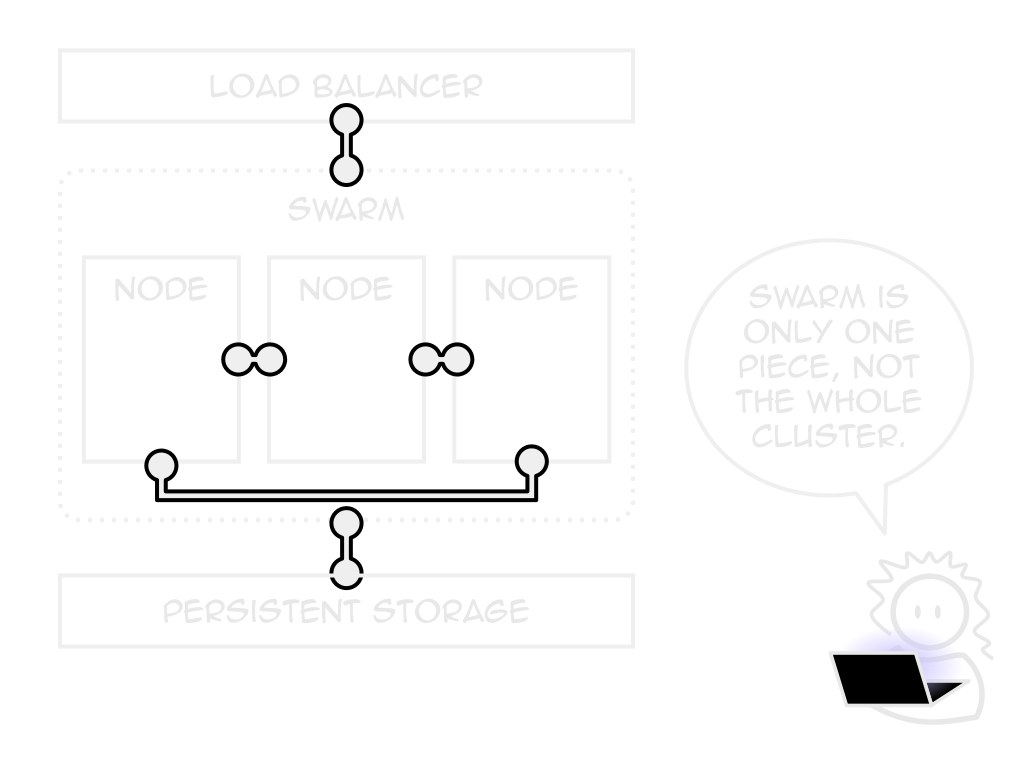

Swarm mode

Docker's own orchestrator

Built-in, free to use

Lack of hosting choice

Docker Cloud was expensive (and has been discontinued)

Self-host, sort out LB and storage

Too "uncontrolled"

Containers are the only manageable unit

Internal LB creates Drupal problems

Koo-ber-WHAT-ees?

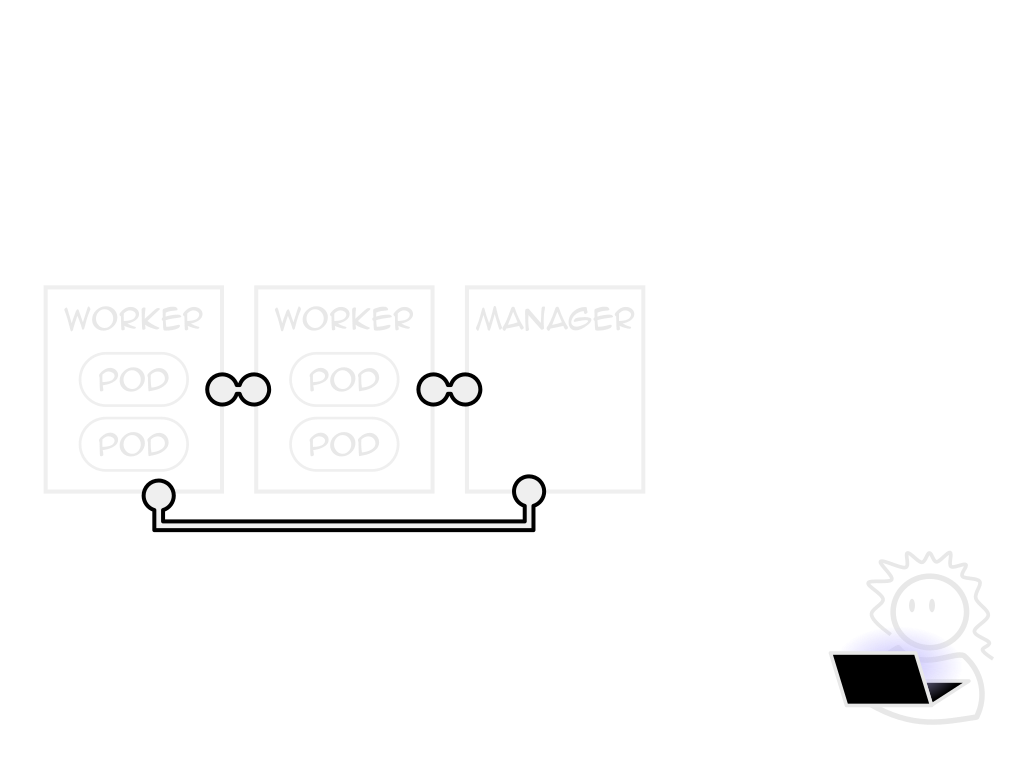

Kubernetes

Open-source container orchestrator

Created by Google, maintained by CNCF

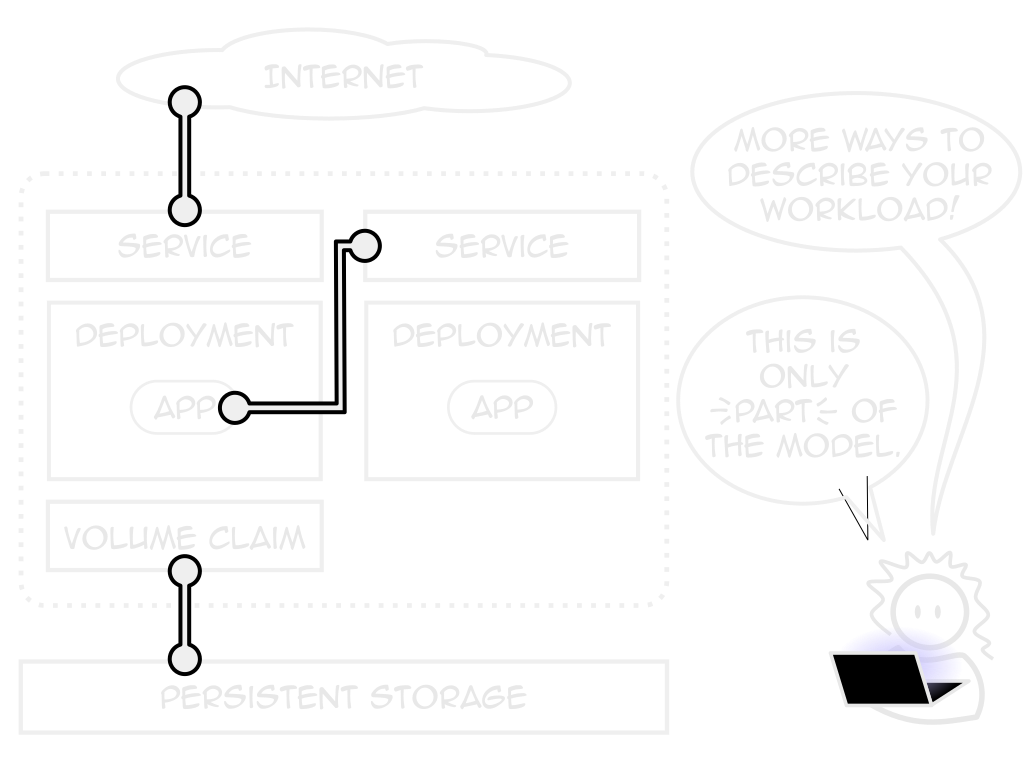

Expressive Power

Pods are only one of many manageable objects

Tailor k8s to your app, not the other way around

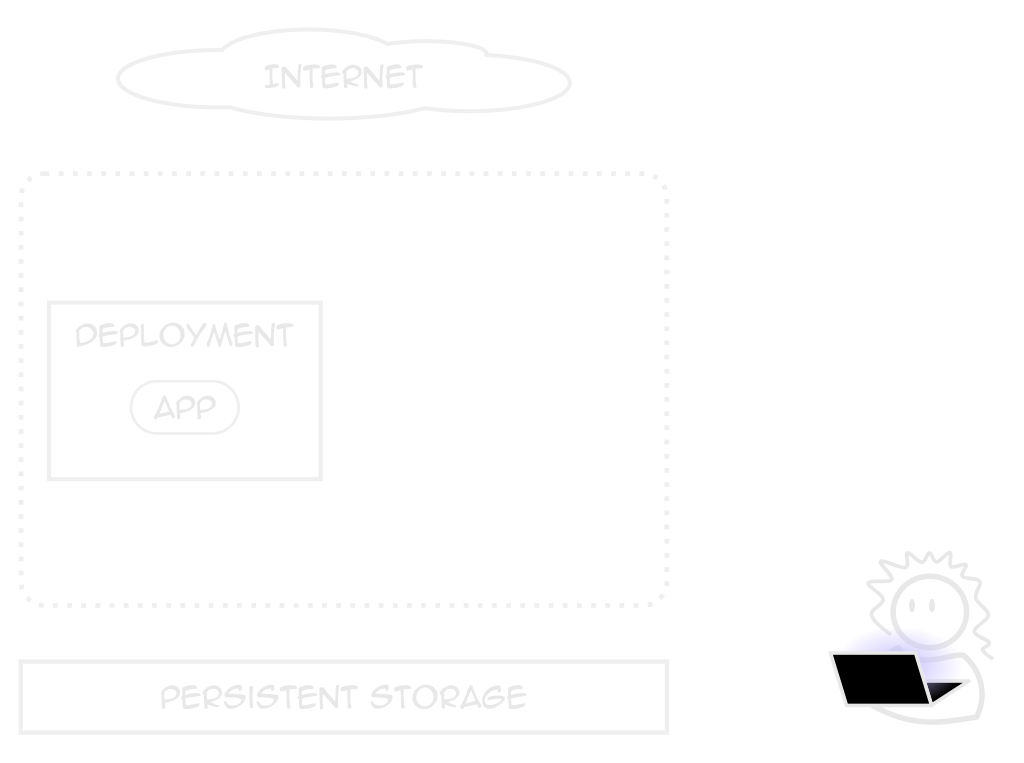

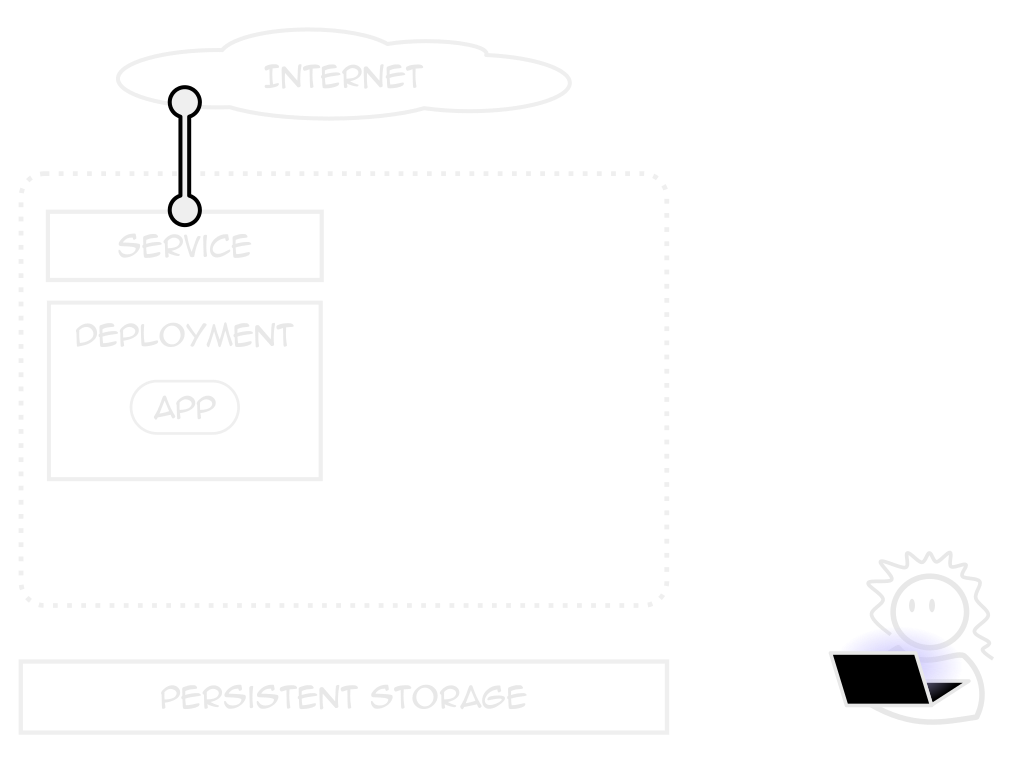

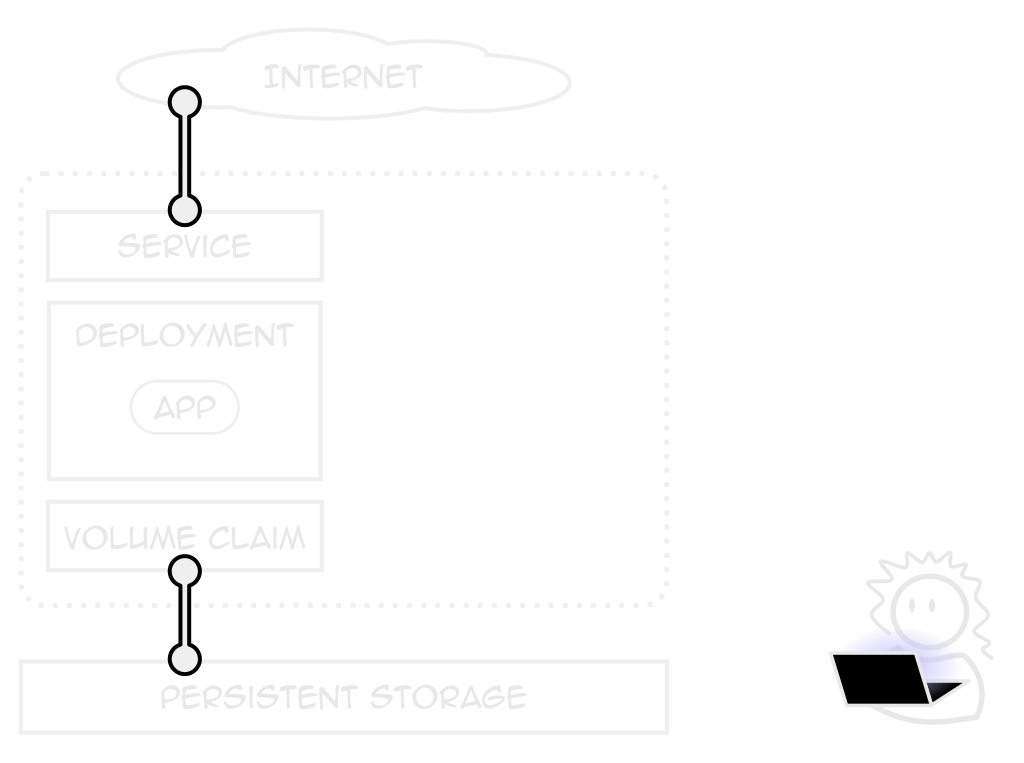

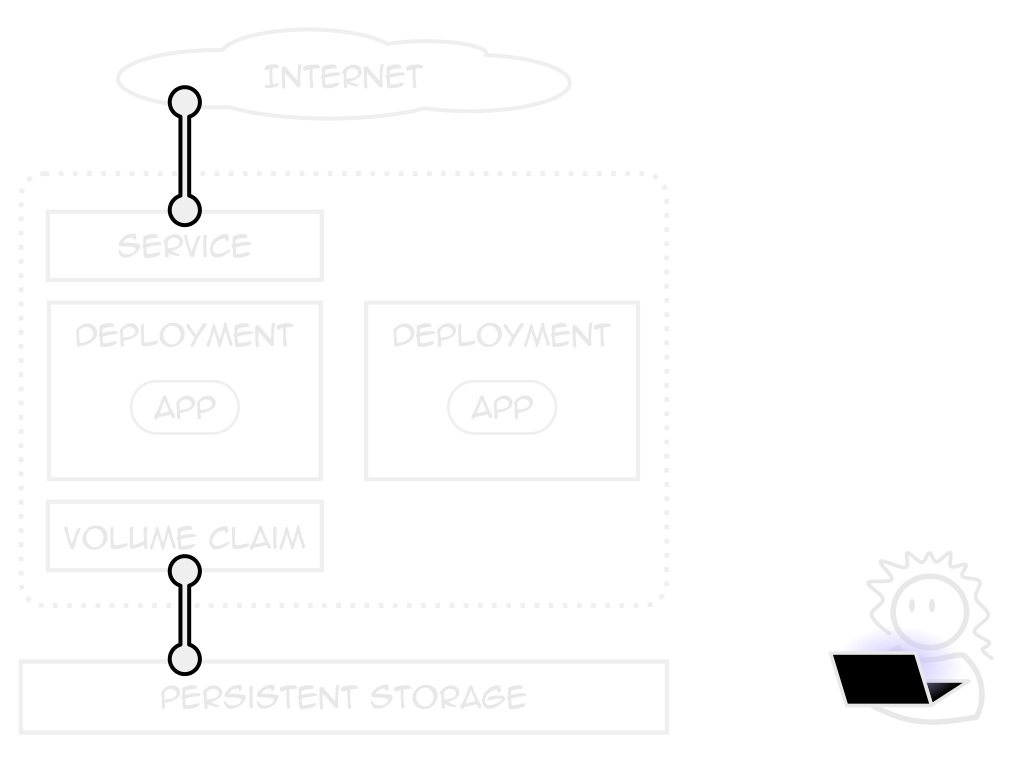

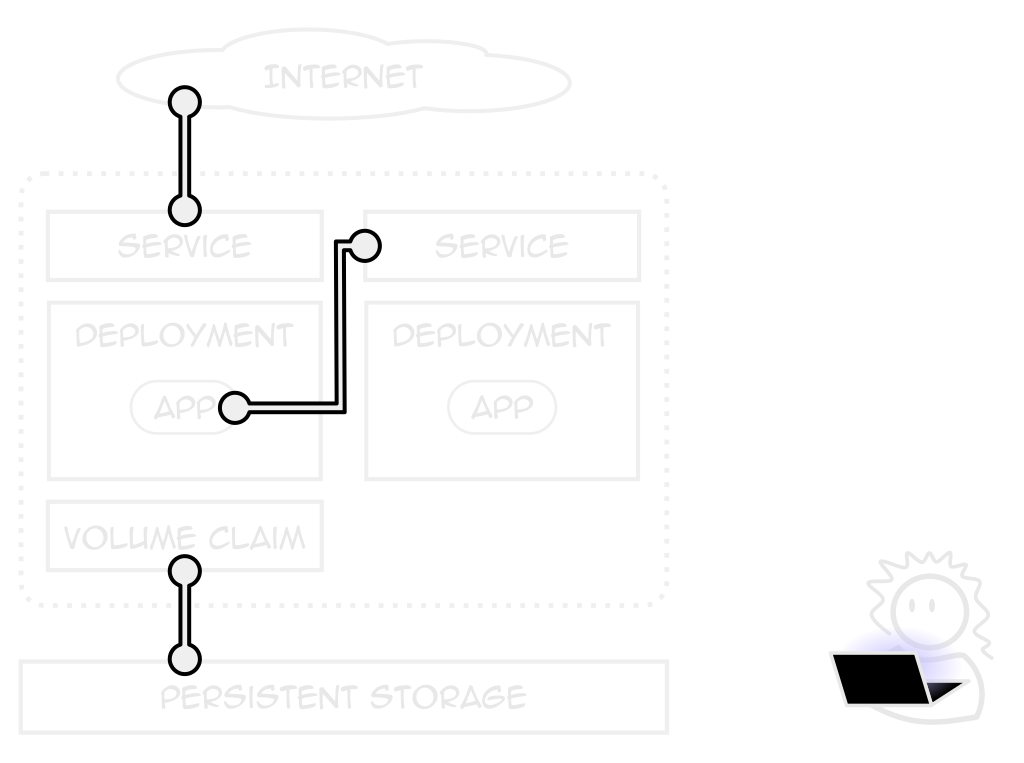

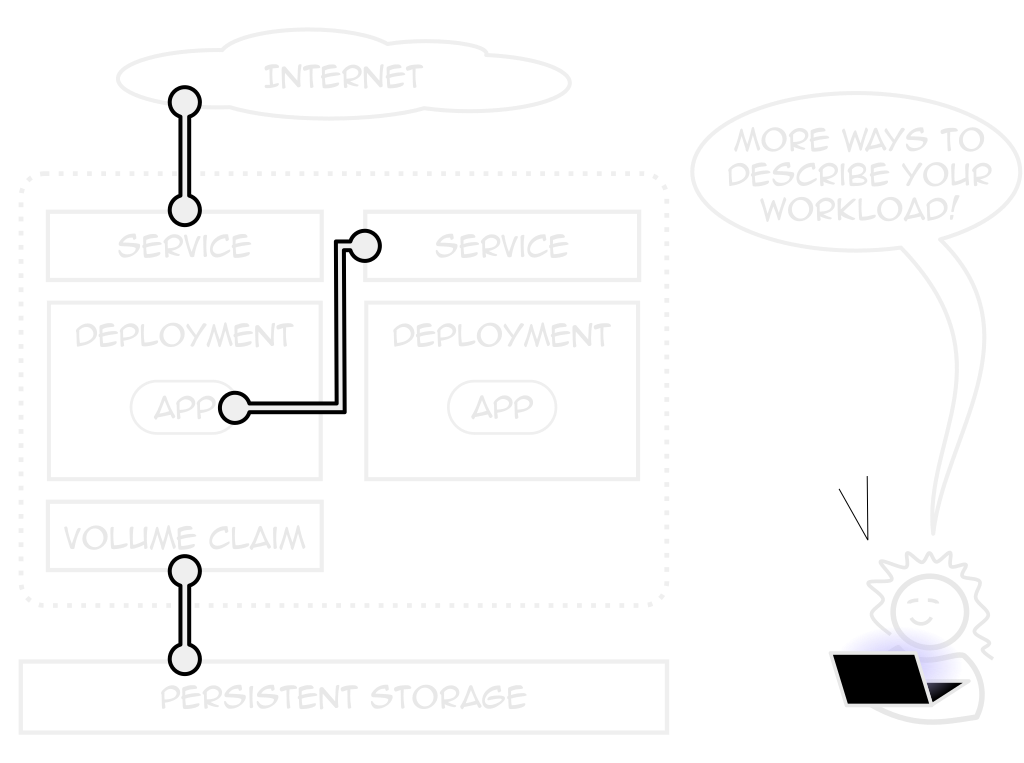

Deployments

Describes an application

Not just what containers to use

Replicas, health checks, placement
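A minimal sketch of one Deployment expressing all three. The probe paths and timings are assumptions, and the `nodeSelector` assumes the worker nodes carry an `app=web` label:

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                     # Replicas: how many pods to run.
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      nodeSelector:
        app: web                  # Placement: assumes nodes are labeled app=web.
      containers:
        - name: web
          image: ten7/flight-deck-web
          ports:
            - containerPort: 80
          readinessProbe:         # Health check: send traffic only once this passes.
            httpGet:
              path: /             # Assumed path; a lightweight status page may be better.
              port: 80
            initialDelaySeconds: 10
            periodSeconds: 10
          livenessProbe:          # Health check: restart the container if this fails.
            tcpSocket:
              port: 80
            initialDelaySeconds: 30
            periodSeconds: 20
```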

Services

A consistent application endpoint

Internal, external, load-balanced
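An internal endpoint is a Service of type `ClusterIP` (the default), reachable only from inside the cluster. A minimal sketch for a hypothetical MySQL deployment whose pods are labeled `app: mysql`:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql            # Pods in the same namespace can connect to host "mysql".
spec:
  type: ClusterIP        # Internal only; LoadBalancer would expose it externally.
  selector:
    app: mysql           # Assumed pod label.
  ports:
    - name: mysql
      port: 3306
      targetPort: 3306
```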

Persistent Volume Claim (PVC)

A request for persistent storage

How much, from where, shareability
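Each of those three questions maps to a field in the claim. A minimal sketch; the claim name is hypothetical, and `do-block-storage` is assumed to be the provider's storage class (class names vary by provider):

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data                     # Hypothetical name.
spec:
  accessModes:
    - ReadWriteOnce                    # Shareability: read-write from a single node.
  storageClassName: do-block-storage   # From where: assumed DigitalOcean class.
  resources:
    requests:
      storage: 10Gi                    # How much.
```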

Deploying objects

Declarative YAML pushed to the manager

YAML can be patched, diffed, even replaced

Getting to Kube-y station

Self-host

Internal on existing hardware...

...or 3rd-party VPS service

Less lock-in

No reliance on special APIs

Requires staff to manage cluster, set up storage

Storage is still tricky

Traditionally managed NFS
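A self-managed NFS export can be handed to Kubernetes as a statically defined PersistentVolume, which (unlike most block storage) allows many writers. A minimal sketch; the server name, export path, sizes, and object names are hypothetical:

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: drupal-files-nfs
spec:
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteMany                  # NFS lets many pods write at once.
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: nfs.example.internal     # Hypothetical NFS server.
    path: /exports/drupal-files      # Hypothetical export path.
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: drupal-files
spec:
  storageClassName: ""               # Skip dynamic provisioning...
  volumeName: drupal-files-nfs       # ...and bind to the PV above.
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 50Gi
```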

Managed Kubernetes

Like a VPS, but for k8s workloads

Pay only for workers, storage baked in

Providers

Amazon, Azure, DigitalOcean, Google GKE, others

Experiment! Each has pros & cons

DigitalOcean

Worldwide k8s provider

REST-based API

The YAML is all around you

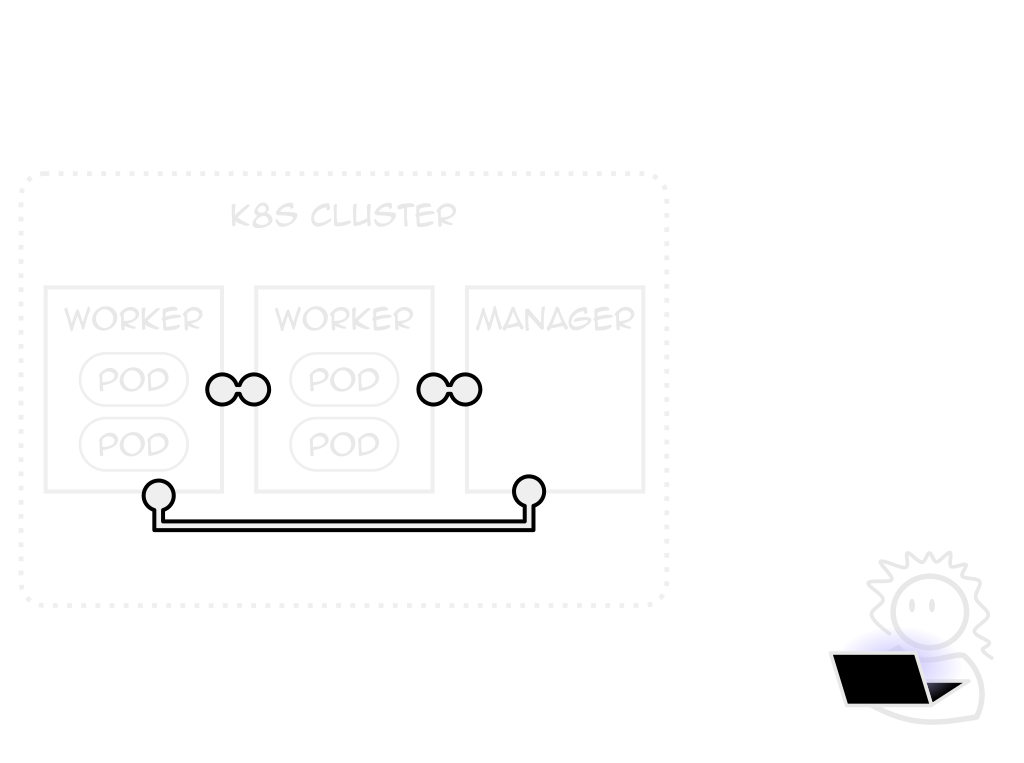

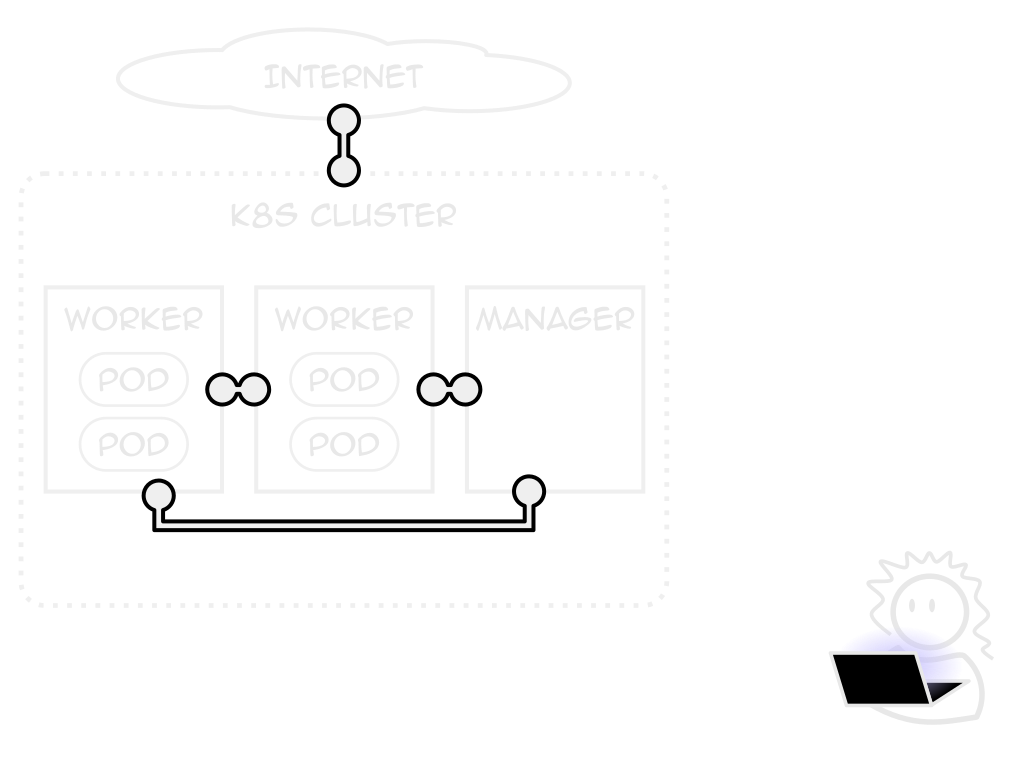

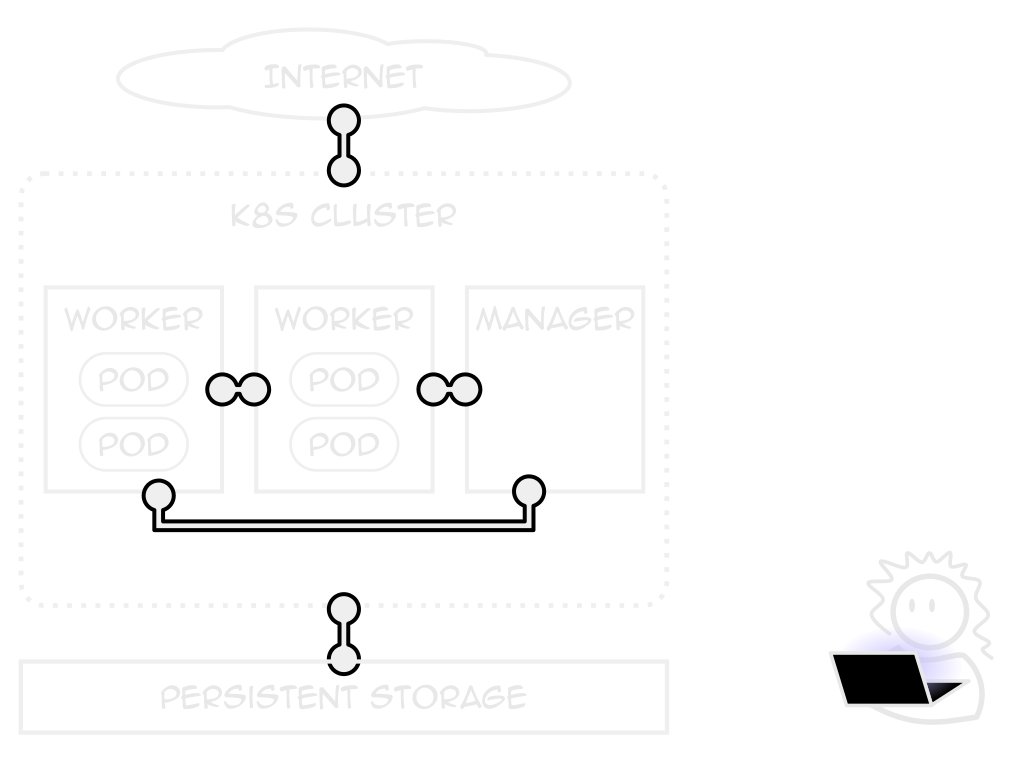

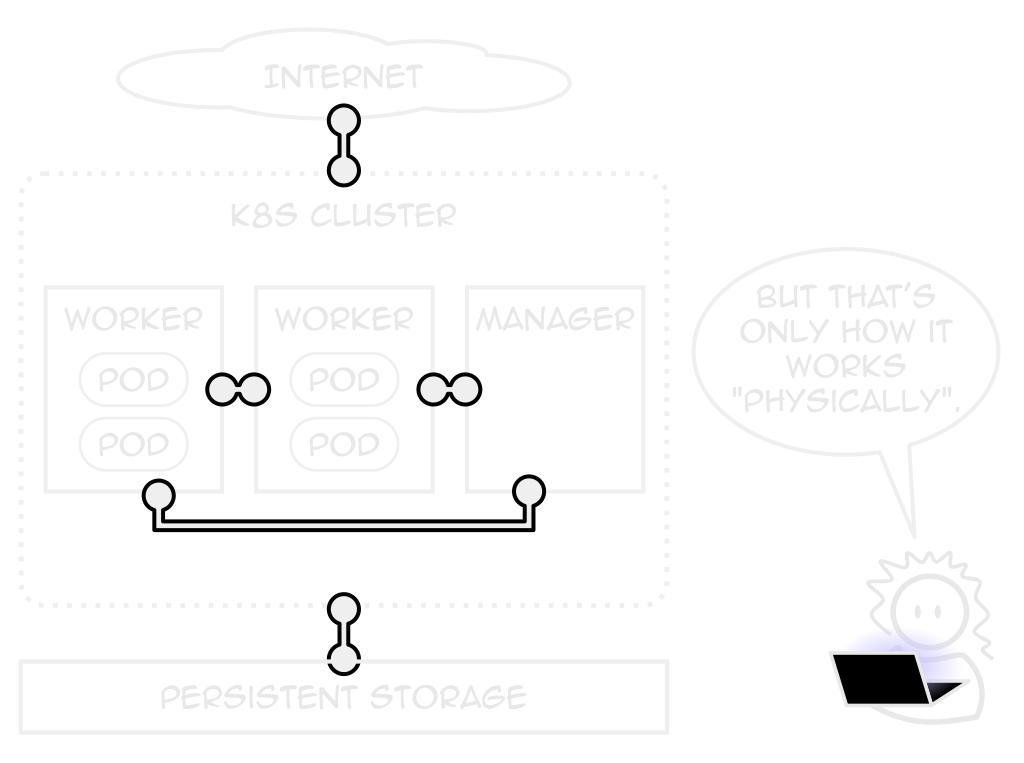

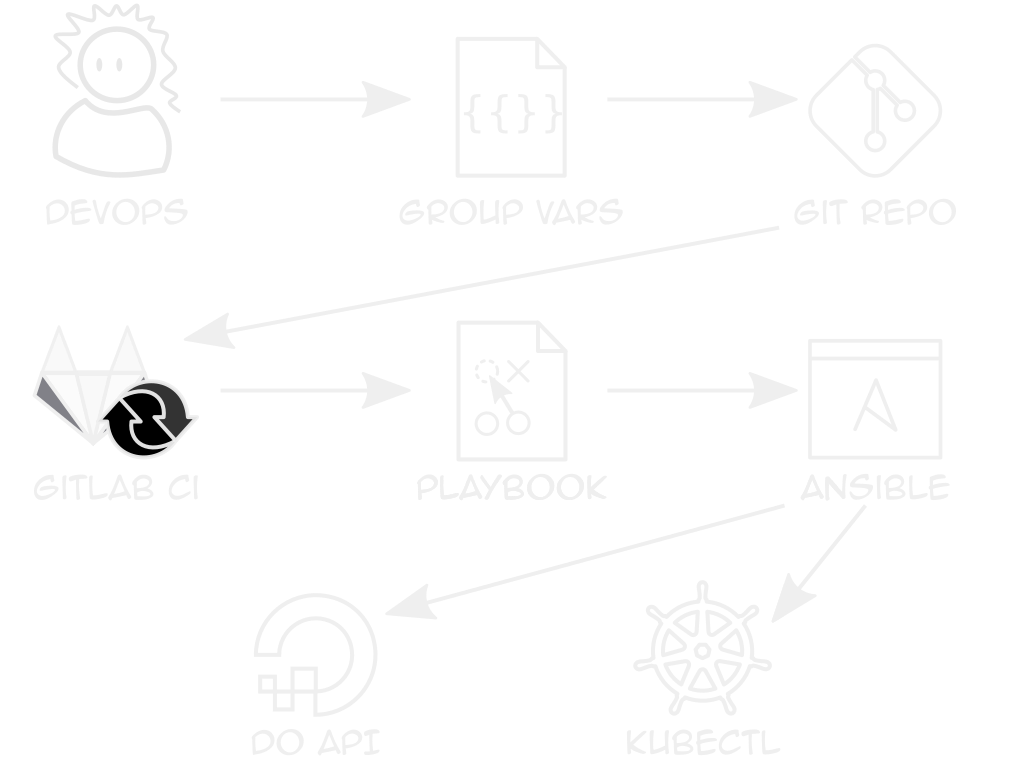

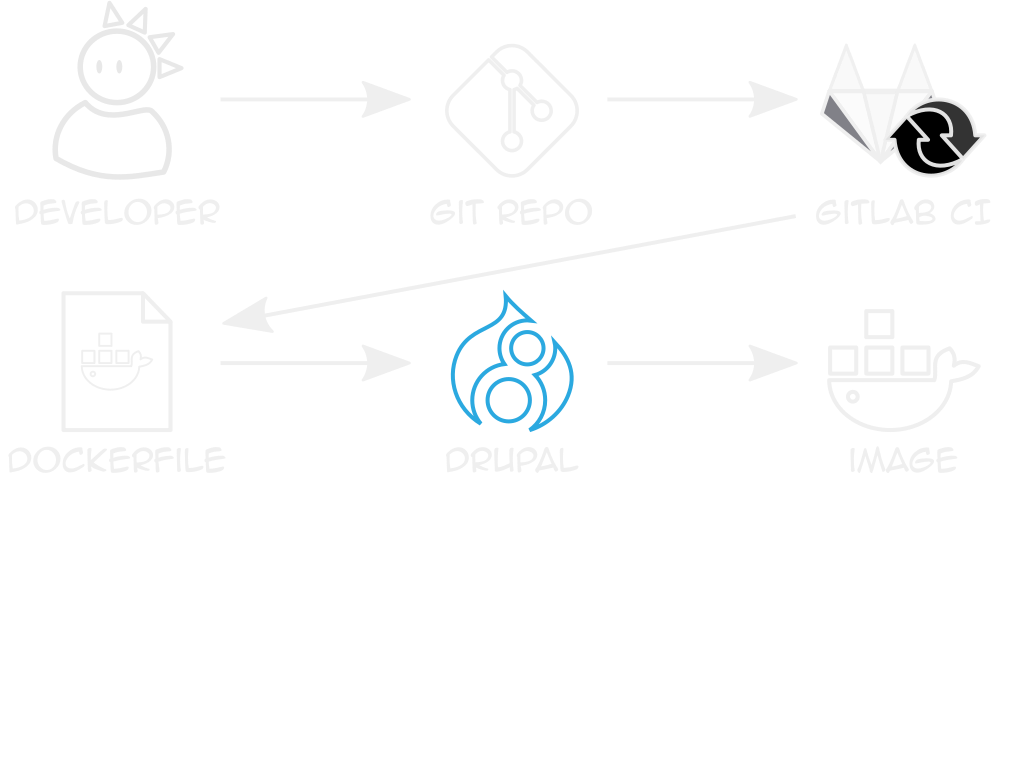

Architecture

Create a 3-worker cluster of s-1vcpu-2gb nodes

Use TEN7's Flight Deck for containers

```yaml
---
apiVersion: apps/v1          # k8s' API version for this doc.
kind: Deployment             # The object type.
metadata:
  name: web                  # The name of the deployment.
spec:
  replicas: 3                # The number of replicas.
  selector:                  # Required in apps/v1; must match the template labels.
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web             # A key/value pair used by the service.
    spec:
      containers:
        - image: ten7/flight-deck-web          # The image to use.
          imagePullPolicy: Always
          name: web
          ports:
            - containerPort: 80                # Open port 80.
        - image: "ten7/flight-deck-varnish"    # The image to use.
          imagePullPolicy: Always
          name: varnish
          ports:
            - containerPort: 6081              # Open port 6081.
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  ports:
    - name: http
      port: 80
    - name: varnish
      port: 6081
  selector:
    app: web
  type: LoadBalancer
```

Secrets

Defined as their own object

Mounted into the deployment as a disk

```yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: mysql
data:
  drupal-db-name.txt: "ZHJ1cGFsX2Ri"    # Must be
  drupal-db-user.txt: "ZHJ1cGFs"        # base64
  drupal-db-pass.txt: "cGFzc3dvcmQ="    # encoded.
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      volumes:
        - name: vol-secrets-db            # Create a "disk"
          secret:                         # for our mysql secret.
            secretName: mysql
      containers:
        - image: ten7/flight-deck-web
          imagePullPolicy: Always
          name: web
          ports:
            - containerPort: 80
          volumeMounts:
            - name: vol-secrets-db        # Define where to mount
              mountPath: /secrets/mysql   # our DB credentials.
        - image: "ten7/flight-deck-varnish"
          imagePullPolicy: Always
          name: varnish
          ports:
            - containerPort: 6081
```

Provisioning PVCs

DigitalOcean, GKE, others: Single writer only!

Can't use for multiple web pods

Static files

...do not need block storage!

Object stores are multi-writer by default

StatefulSets

A special kind of Deployment

Hostname assigned deterministically

```yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: memcache
spec:
  selector:
    matchLabels:
      app: memcache    # Must match the template labels and the service selector!
  serviceName: memcache
  replicas: 3
  template:
    metadata:
      labels:
        app: memcache
    spec:
      containers:
        - image: "memcached:1.5-alpine"
          imagePullPolicy: Always
          name: "memcache"
          ports:
            - containerPort: 11211
              name: memcache
              protocol: TCP
```

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: memcache
spec:
  clusterIP: None      # Turn off load balancing!
  ports:
    - name: memcache
      port: 11211
      protocol: TCP
  selector:
    app: memcache
```

Ingress

k8s-managed edge load balancer

Maps domain names to k8s Services

Can terminate SSL

```yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: "my-cluster-ingress"
  annotations:
    # The annotation prefix depends on the ingress controller's configuration.
    ingress.kubernetes.io/app-root: /                # Fix rewrites.
    ingress.kubernetes.io/affinity: cookie
    ingress.kubernetes.io/enable-cors: "true"        # Enable CORS.
    ingress.kubernetes.io/proxy-body-size: "128m"    # File uploads.
spec:
  tls:
    - hosts:
        - "example.com"        # Use HTTPS for this domain name.
      secretName: ingress      # The cert chain is stored here.
  rules:
    - host: example.com        # For this domain name...
      http:
        paths:
          - path: /            # ...on this path...
            pathType: Prefix
            backend:
              service:
                name: web      # ...map to this service...
                port:
                  number: 6081 # ...on this port.
```

Ingress TLS secret

Provides the combined cert chain...

...and the private key.

```yaml
---
apiVersion: v1
data:
  tls.crt: "base64_encoded_cert_chain"
  tls.key: "base64_encoded_private_key"
kind: Secret
metadata:
  name: ingress
  namespace: default
type: kubernetes.io/tls
```

Cert Manager

Open source cert store, provisioner
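With cert-manager installed, a Certificate object can keep the `ingress` TLS Secret filled automatically instead of pasting base64 by hand. A minimal sketch using the cert-manager v1 API; the `letsencrypt-prod` ClusterIssuer is hypothetical and must be created separately:

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: example-com
  namespace: default
spec:
  secretName: ingress          # cert-manager writes tls.crt and tls.key here.
  dnsNames:
    - example.com
  issuerRef:
    name: letsencrypt-prod     # Hypothetical issuer name.
    kind: ClusterIssuer
```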

Multi-tenancy

One site = one namespace

Allows consistent Deployment and Service names across sites
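A minimal sketch of one site's namespace; the namespace name is hypothetical. Because names only need to be unique within a namespace, every site can have its own Service called `web`:

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: example-com          # Hypothetical: one namespace per site.
---
apiVersion: v1
kind: Service
metadata:
  name: web                  # The same name can be reused in each site's namespace.
  namespace: example-com
spec:
  selector:
    app: web
  ports:
    - name: http
      port: 80
```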

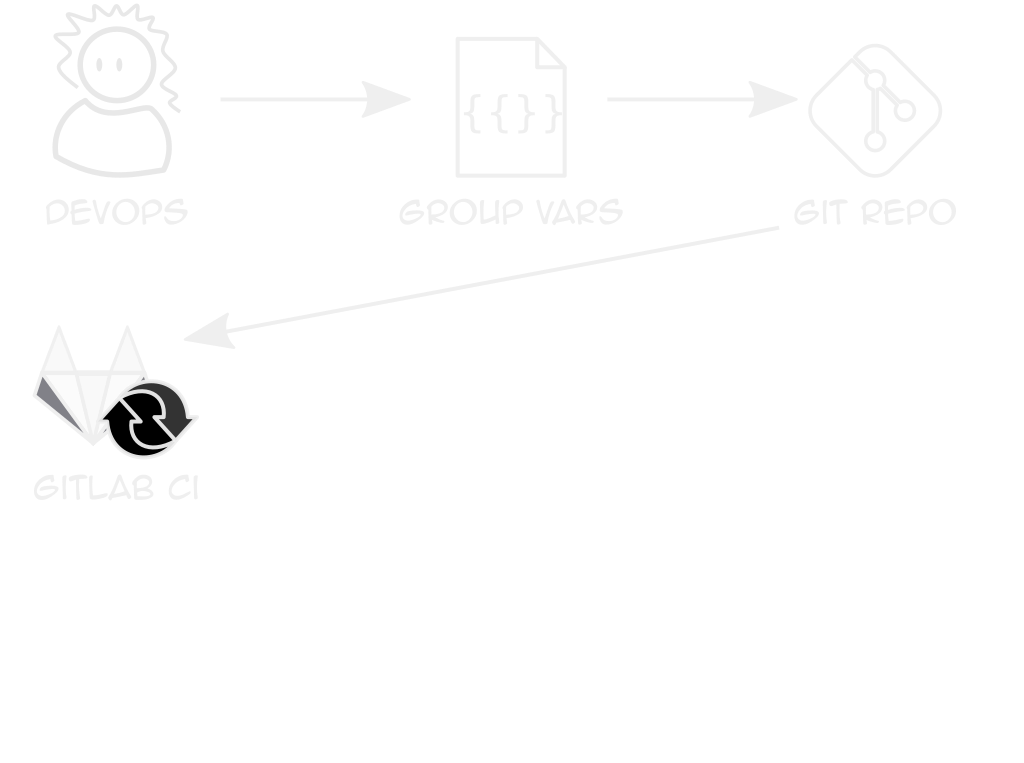

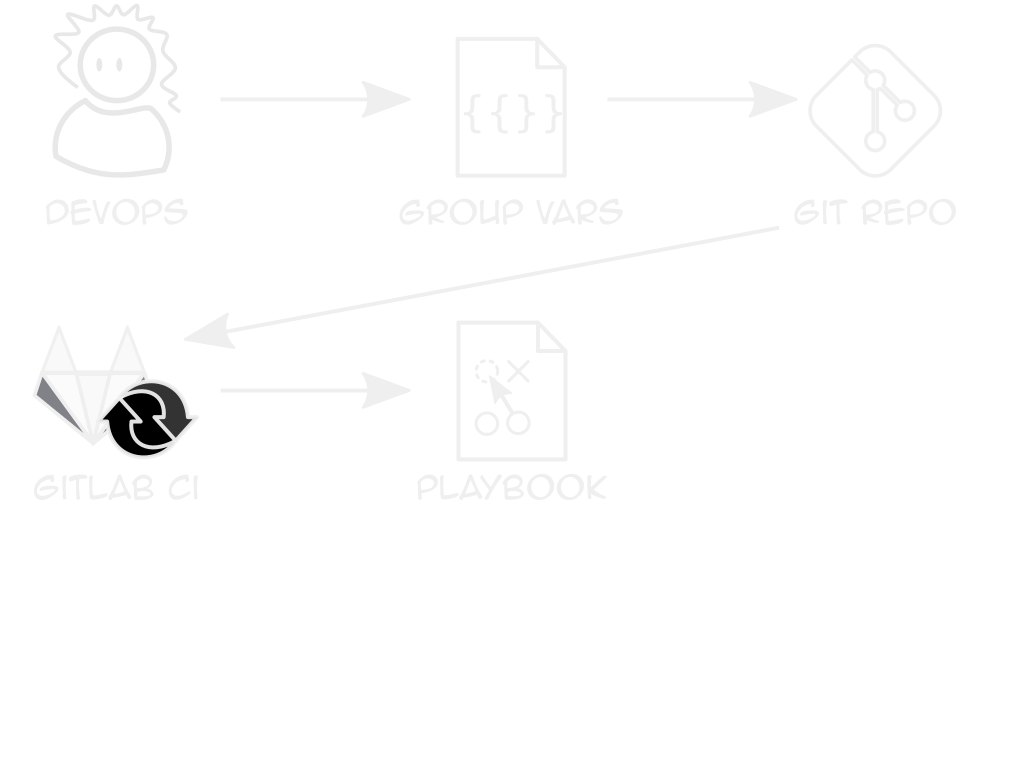

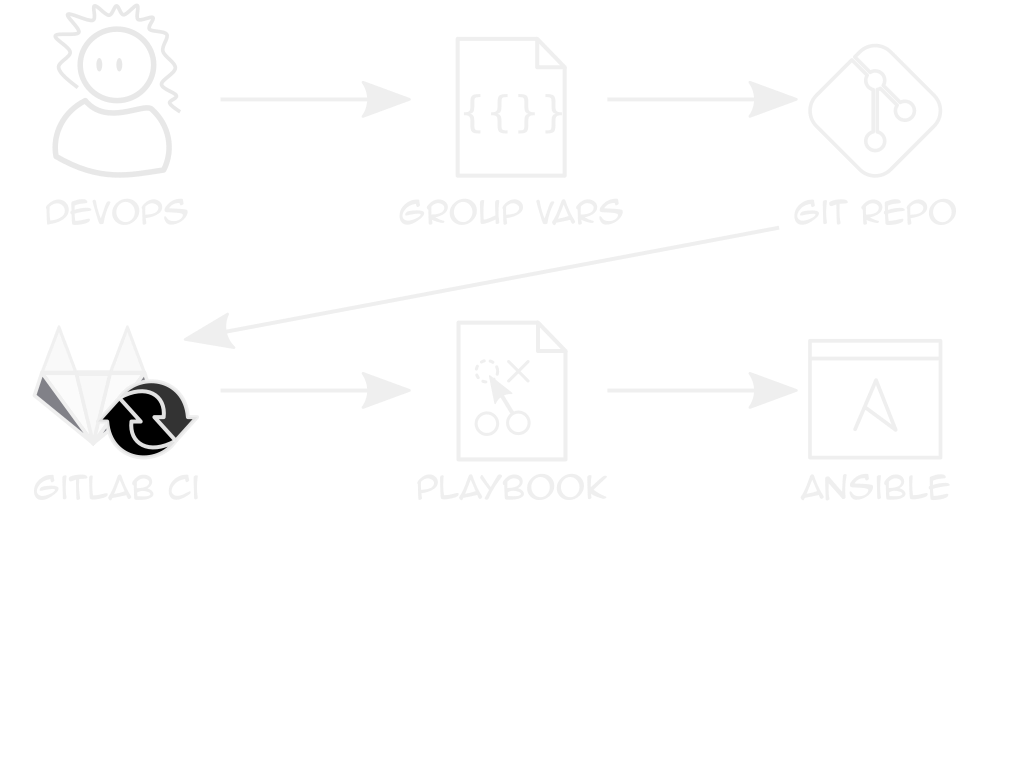

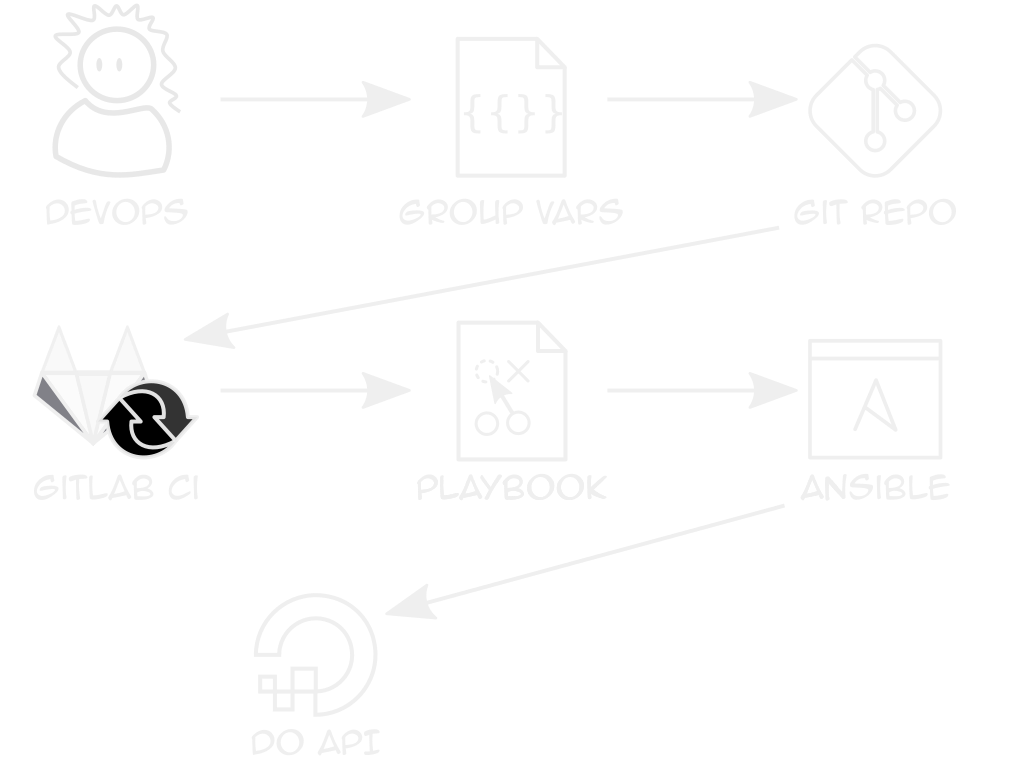

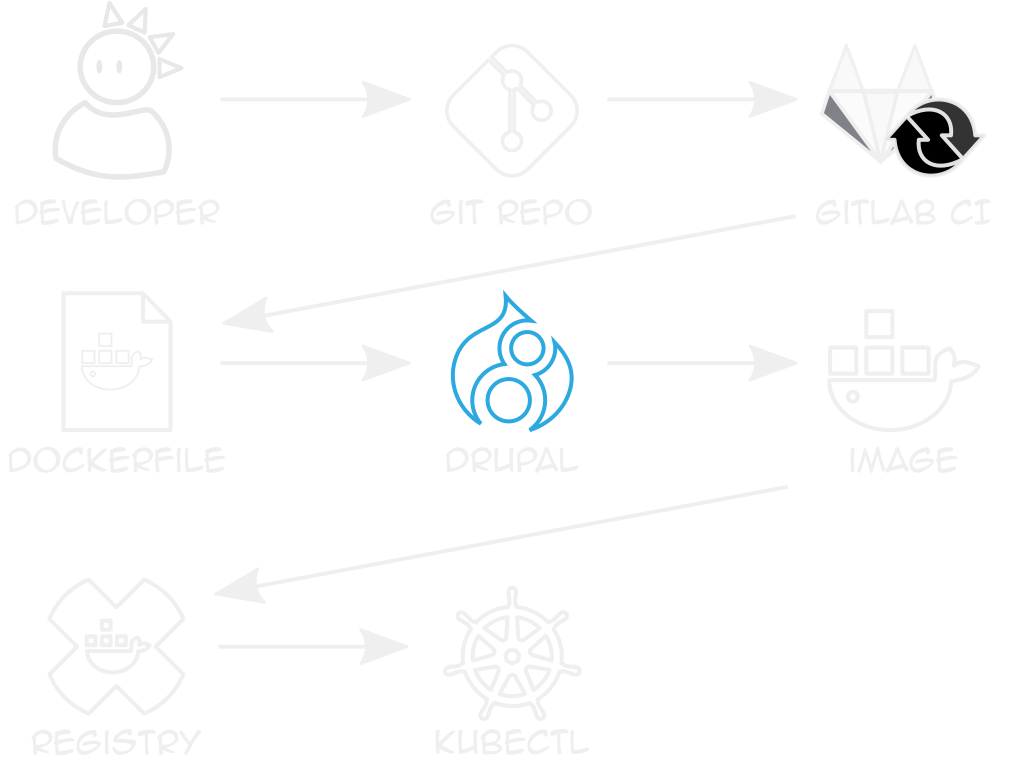

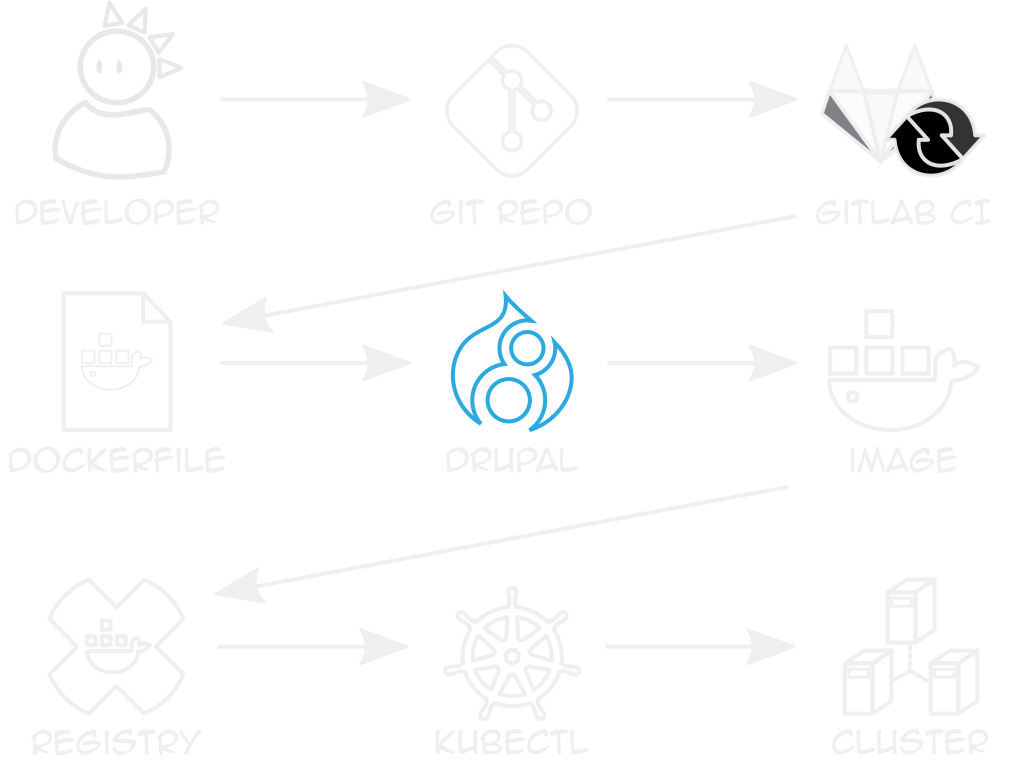

The buildchain of Yavin

Deploying YAML

kubectl apply -f path/to/my_object.yml

Repeat for each YAML file to deploy

using namespaces

--namespace parameter on kubectl

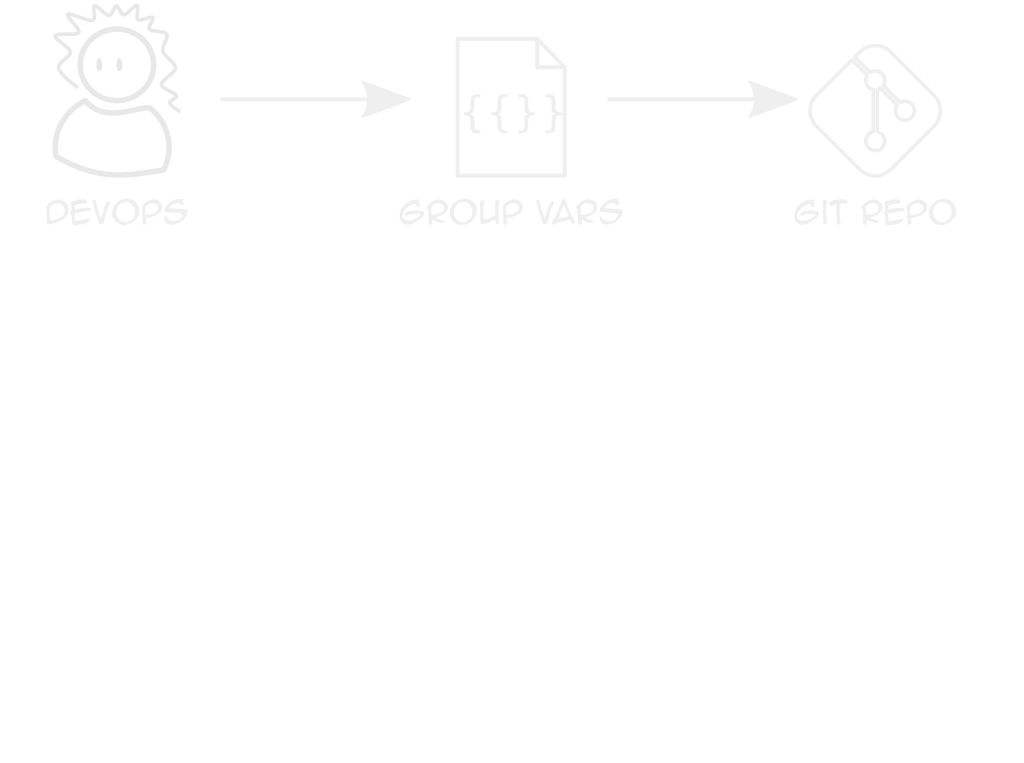

Repeatable Clusters

Clients only differ in sizes, logins, some features

Use Ansible vars + k8s module to deploy YAML
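A minimal sketch of that approach with the `kubernetes.core.k8s` module (called `k8s` in older Ansible releases). The variable names, kubeconfig path, and Jinja2 template path are hypothetical; the template would use the per-client variables such as `web_replicas`:

```yaml
---
- hosts: localhost
  connection: local
  gather_facts: false
  vars:
    site_namespace: "example-com"                  # Hypothetical per-client values.
    web_replicas: 3
    kubeconfig_path: "/path/to/my/kubeconfig.yaml"
  tasks:
    - name: Create the site namespace
      kubernetes.core.k8s:
        kubeconfig: "{{ kubeconfig_path }}"
        state: present
        definition:
          apiVersion: v1
          kind: Namespace
          metadata:
            name: "{{ site_namespace }}"

    - name: Apply the web Deployment rendered from a template
      kubernetes.core.k8s:
        kubeconfig: "{{ kubeconfig_path }}"
        state: present
        namespace: "{{ site_namespace }}"
        template: "templates/web-deployment.yml.j2"   # Hypothetical template file.
```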

Authentication

Create a Personal Access Token

Passed to the DigitalOcean API as an HTTP header

Creating the cluster

ten7.digitalocean role on Ansible Galaxy

Handles scaling, node pools, and more

```yaml
---
- hosts: all
  vars:
    digitalocean_api_token: "abcde1234567890"
    digitalocean_clusters:
      - name: "my_k8s_cluster"
        region: "sfo2"
        node_pools:
          - size: "s-1vcpu-2gb"
            count: 3
            name: "web-pool"
            labels:
              - key: "app"
                value: "web"
          - size: "s-1vcpu-2gb"
            count: 1
            name: "db-pool"
            labels:
              - key: "app"
                value: "database"
  roles:
    - ten7.digitalocean
```

Authorizing kubectl

ten7.digitalocean_kubeconfig role

Gets the auth file from the DO API

```yaml
---
- hosts: all
  vars:
    digitalocean_kubeconfig:
      cluster: "my_k8s_cluster"
      kubeconfig: "/path/to/my/kubeconfig.yaml"
  roles:
    - ten7.digitalocean_kubeconfig
```

Writing the k8s YAML

Creates secrets, deployments, services

Examples on GitHub

You're all clear, k8s!

updating in place

Used for shared hosting, VPSes

SFTP, git deploy, some CI systems

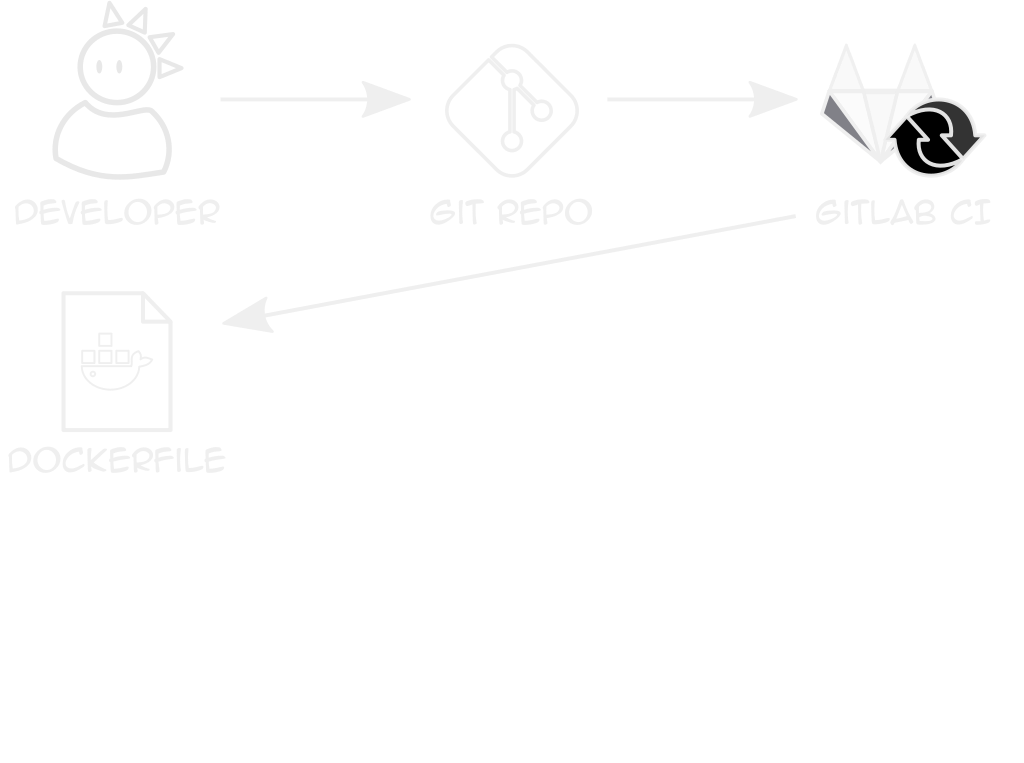

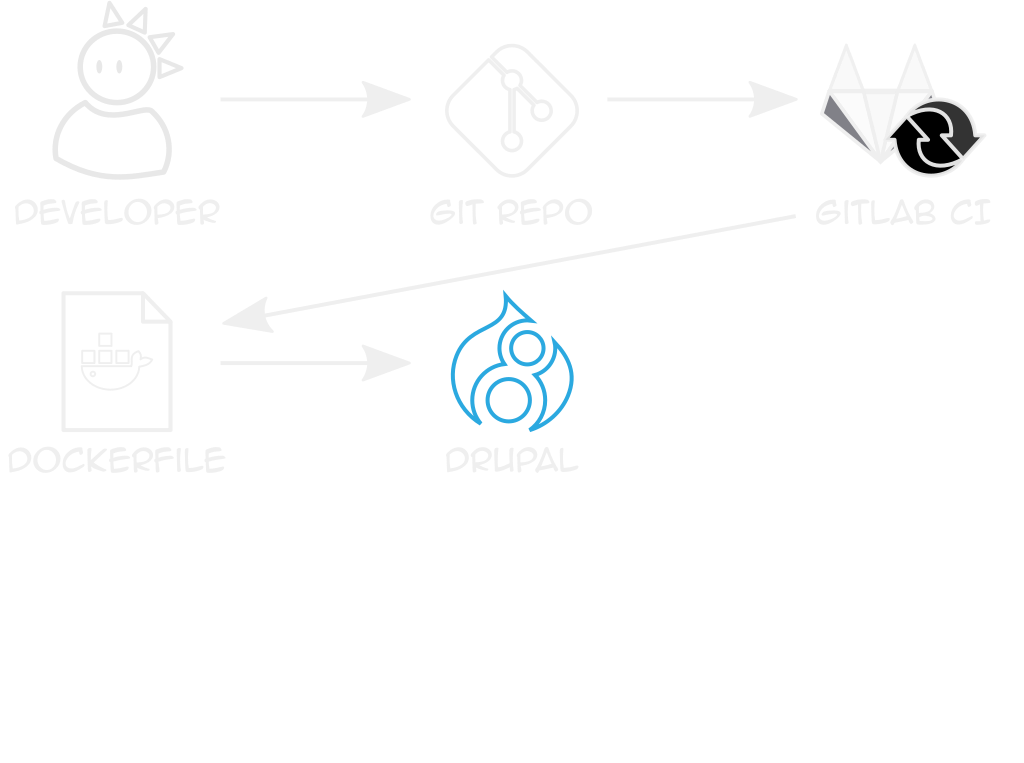

the site is the app

It should be the container!

example Dockerfile

github.com/ten7/flight-deck-drupal

Creates directories, builds Drupal

Entrypoint writes settings.php

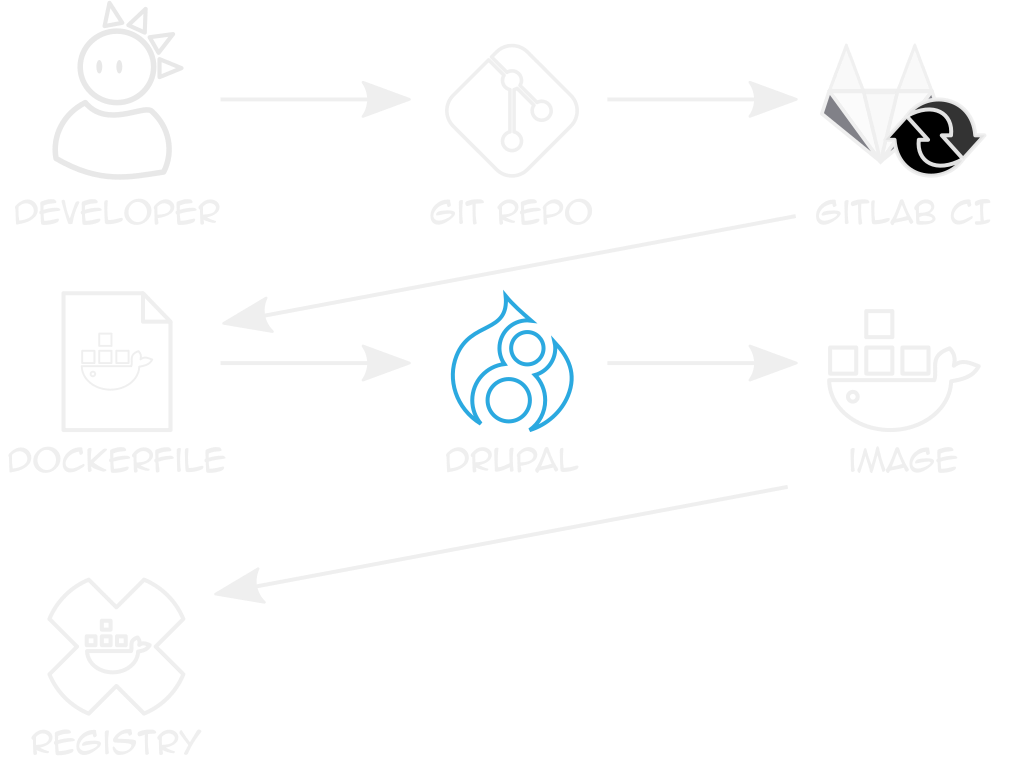

private registry

Stores built, site-specific images

k8s needs the registry auth token passed in as a Secret
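Registry credentials use a dedicated Secret type holding a base64-encoded Docker `config.json`. A minimal sketch; the encoded value is a placeholder, and the name matches the `imagePullSecrets` entry a Deployment would reference:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-registry-key
type: kubernetes.io/dockerconfigjson
data:
  # base64 of a Docker config.json such as
  # {"auths":{"myRegistry.tld:5000":{"auth":"..."}}}
  .dockerconfigjson: "base64_encoded_docker_config_json"
```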

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    app: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      imagePullSecrets:
        - name: my-registry-key    # Needed to auth with registry
      containers:
        # Site-specific image, with tags as version
        - image: myRegistry.tld:5000/example.com:1.0.7
          imagePullPolicy: Always
          name: web
          ports:
            - containerPort: 80
        - image: "ten7/flight-deck-varnish"
          imagePullPolicy: Always
          name: varnish
          ports:
            - containerPort: 6081
```

rolling update

Replaces running containers with new image

Performed sequentially, reduces outages

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    app: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate      # Use rolling updates
    rollingUpdate:
      maxSurge: 1            # Allow up to 4 pods (3 + 1) during the update
      maxUnavailable: 1      # At most 1 pod may be unavailable at a time
  minReadySeconds: 5         # A new pod must stay ready 5s before it counts as available
  template:
    metadata:
      labels:
        app: web
    spec:
      imagePullSecrets:
        - name: my-registry-key
      containers:
        - image: myRegistry.tld:5000/example.com:1.0.7
          imagePullPolicy: Always
          name: web
          ports:
            - containerPort: 80
        - image: "ten7/flight-deck-varnish"
          imagePullPolicy: Always
          name: varnish
          ports:
            - containerPort: 6081
```

Further reading

Special thanks

This event!

Thank you!

socketwench.github.io/return-of-the-clustering